Today, I want to share my experience on Exadata Cloud at Customer, aka ECC (or ECM :).

I want to share the things I have seen so far... (I have 3 ongoing ECC migrations at the moment.)

Rather than listing the benefits of this machine (I already did that) and of the Cloud at Customer model, I will concentrate on explaining the database deployment and patching lifecycle.

First, I want you to know the following:

- Oracle doesn't force us to use TDE for the 11.2.0.4 databases that are deployed into ECC.

- Oracle recommends us to use TDE for the databases deployed in ECC.

- Oracle has Cloud GUIs delivered with ECC. (both for creating instances + creating databases..)

- Oracle Cloud GUIs in ECC can even patch the databases. (with PSUs and other stuff)

- Of course, customers prefer to use these GUIs. However, using these ECC machines as traditional Exadata machines is also supported. That is, we can download our RDBMS software and install it into ECC machines manually as well.

- We can create our databases using dbca (as an alternative to the tools in GUIs) -- We can deploy and patch our databases just like we do in a non-cloud Exadata machine. (at least currently..)

- ECC software is patched by Oracle. Both ECC and its satellite (OCC) are patched by Oracle in a rolling fashion. (Having RAC instances gives us an advantage here.)

- There is a new edition of Oracle Database, it seems. It is called Extreme Edition, and I have only seen it in ECC machines.

- We can't reinstall GRID Home in ECC.. What we can do is to patch it.. ( if a reinstall is needed, we create SRs)

- When we have a problem, we create an SR using the ECC CSI and it is handled by the Cloud Team of Oracle Support.

- Oracle Homes and patches delivered by the ECC GUIs are a little different than the ones deployed with traditional methods.

- We see banners of Extreme edition in the headers of sqlplus and similar tools.

- Keeping the ECC software up-to-date is important, because there are small bugs in the earlier releases. (For example, expdp cannot run in parallel; it says "this is not an Enterprise Edition database", probably because of the Extreme Edition specific info delivered in ECC Oracle Homes.)

So far so good. Keep these points in mind and continue reading.

The approach that I follow in ECC projects is simple.

That is; if you deploy a database using Cloud GUI, then continue using Cloud GUI.

I mean, if you create a database (and an Oracle Home) using Oracle Database Cloud Service Console, then patch that database using Cloud Service Console.

But if you install a database home and create a database using the standard approach (download Enterprise Edition software, use dbca etc..), then continue patching using the standard approach.

If you mix these 2 approaches, then you need to put in a lot of effort to make things go right.

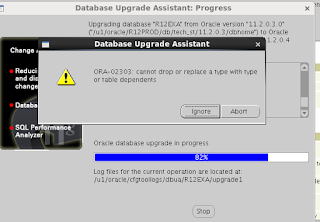

Yesterday, I was at a customer site and the customer reported that they couldn't run dbca successfully to create their database in ECC.

They actually deployed the Oracle Home using Cloud Console, and then they tried to use dbca to create a database using that home. ( home is from GUI, database is from dbca)

The error they were getting during the dbca run was the following;

When I checked the dbca logs, I saw that dbca was trying to create the USERS tablespace with TDE, as a result of the encrypt_new_tablespaces parameter. dbca was setting it to CLOUD_ONLY, probably because the templates were configured that way.

See.. Even the behaviour of the dbca is different, when it is executed from an Oracle Home deployed via ECC GUIs.

I fixed the error by customizing the database that dbca would create. I told dbca not to create the USERS tablespace, and then the error disappeared.

After the database was created, I set the encrypt_new_tablespaces parameter to DDL (as my customer wanted), and then they could create new tablespaces without using TDE.

-- Alternatively, I could have created a master key and left the parameter as is (CLOUD_ONLY).
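To make the parameter change concrete, here is a minimal sketch of a shell helper that only builds the ALTER SYSTEM statement. The function name `encrypt_param_sql` is hypothetical, and the `SCOPE=BOTH SID='*'` clauses are my assumption for a RAC database running on an spfile; adjust them to your environment. The three accepted values are the ones the encrypt_new_tablespaces parameter supports.

```shell
#!/bin/sh
# Hypothetical helper (not part of any ECC tooling): prints the ALTER SYSTEM
# statement that sets encrypt_new_tablespaces to the requested value.
encrypt_param_sql() {
  case "$1" in
    CLOUD_ONLY|ALWAYS|DDL)
      # SCOPE=BOTH and SID='*' are assumptions (spfile in use, all RAC instances).
      printf "ALTER SYSTEM SET encrypt_new_tablespaces = %s SCOPE=BOTH SID='*';\n" "$1"
      ;;
    *)
      echo "unsupported value: $1" >&2
      return 1
      ;;
  esac
}

# Usage on a database node (not executed here):
#   encrypt_param_sql DDL | sqlplus -s / as sysdba
```

Printing the statement instead of running it directly lets you review it before piping it into sqlplus.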

Another interesting story happened during a patch run.

Customer reported that they couldn't patch the database using GUI.

When I checked the GUI, I saw that the patch was listed there, but when I checked the logs of the ECC's patching tool, I saw the wget commands. However, the links that the wget commands were trying to reach were broken.

The patching tools in ECC get the patches using wget automatically, and those patches are not coming from Oracle Support, they are coming from another inventory (a cloud inventory)

Anyway, as the links were broken, the customer created an SR with the cloud team so that they would put the related patches in the place they needed to be.
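If you want to confirm which links are broken before opening the SR, something like the following sketch may help. It assumes the patching log contains the URLs in plain text on the wget lines; the log format is not documented here, so treat the pattern as a guess. The function names `extract_urls` and `check_urls` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers: pull the distinct http(s) URLs out of a log read from
# stdin, then probe each one with wget without downloading the file.
extract_urls() {
  # Assumption: URLs appear as plain text and end at whitespace or a quote.
  grep -oE "https?://[^[:space:]\"']+" | sort -u
}

check_urls() {
  # Reads one URL per line from stdin; --spider only checks that the URL exists.
  while IFS= read -r url; do
    if wget --spider -q "$url"; then
      echo "OK     $url"
    else
      echo "BROKEN $url"
    fi
  done
}

# Usage (the log path is whatever your patching tool writes; not executed here):
#   extract_urls < /path/to/patching.log | check_urls
```

The output of `check_urls` is a handy list to attach to the SR.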

Customer also wanted to apply a patch using the traditional way (opatch auto) into an Oracle Home which was created using GUI.

Actually, we patched the Oracle Home successfully using opatch auto , but then we encountered the error "ORA-00439: Feature Not Enabled: Real Application Clusters", while starting the database instances.

Note that, we downloaded the patch using the traditional way (from Oracle Support) as well.

"ORA-00439: Feature Not Enabled: Real Application Clusters" is normally encountered when oracle binaries are relinked using rac_off.

However, that wasn't the cause in this case.

I relinked properly, but the issue remained.

RAC was on! The related library was linked properly, but ORA-00439 remained!

Then, I started to analyze the make file (ins_rdbms.mk) and found an interesting thing there.
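For anyone who wants to repeat the "is RAC linked in?" check, here is a minimal sketch. It relies on the commonly used check of listing libknlopt.a with `ar -t`: kcsm.o in the listing means the RAC option is linked in, while ksnkcs.o means it is off. The function name `rac_link_state` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads an `ar -t` listing of libknlopt.a from stdin and
# reports whether the RAC option object is present.
rac_link_state() {
  listing=$(cat)
  if printf '%s\n' "$listing" | grep -qxF 'kcsm.o'; then
    echo rac_on        # kcsm.o present: RAC option linked in
  elif printf '%s\n' "$listing" | grep -qxF 'ksnkcs.o'; then
    echo rac_off       # ksnkcs.o present: RAC option linked out
  else
    echo unknown
  fi
}

# Usage on a database node (not executed here):
#   ar -t "$ORACLE_HOME/rdbms/lib/libknlopt.a" | rac_link_state
```

In my case this kind of check said RAC was on, which is exactly why the ORA-00439 was so confusing.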

In ins_rdbms.mk, there were enterprise_edition tags and extreme_edition tags (and other tags as well).

When I checked a little bit further, I saw that according to these tags, the linked libraries differ.
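A quick way to see which edition targets a given home's make file defines is sketched below. It assumes the targets follow the `edition_<name>` naming seen in the `edition_enterprise` target of the relink command in this post; I have not verified the naming of the other targets, so the pattern is an assumption. The function name `list_edition_targets` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: lists make targets whose names start with "edition_"
# in the given make file (assumed naming pattern, see the note above).
list_edition_targets() {
  grep -oE '^edition_[A-Za-z0-9_]+[[:space:]]*:' "$1" \
    | sed 's/[[:space:]]*:$//' \
    | sort -u
}

# Usage on a database node (not executed here):
#   list_edition_targets "$ORACLE_HOME/rdbms/lib/ins_rdbms.mk"
```

Comparing this list between a GUI-deployed home and a manually installed home is a simple way to spot the kind of difference described here.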

Then I realized that this patch that we applied was downloaded from Oracle Support.. (there is no extreme_edition there)

As for the solution, I relinked the binary using the Enterprise Edition argument and the error disappeared:

cd $ORACLE_HOME/rdbms/lib

make -f ins_rdbms.mk edition_enterprise rac_on ioracle

-- We fixed the error, but this home could no longer be fully trusted. It was tainted.

So, what is different there? The patch seems different, right? What about the libraries? Yes, there are small differences in the libraries too.

What about the extreme_edition thing? This seems completely new..

So, again -> "if you deploy a database using Cloud GUI, then continue using Cloud GUI. I mean, if you create a database (and an Oracle Home) using Oracle Database Cloud Service Console, then patch that database using Cloud Service Console.

But if you install a database home and create a database using the standard approach (download Enterprise Edition software, use dbca etc..), then continue patching using the standard approach."

That's it :)