Last week, a TNS-12514 error was reported for one of the Exadata nodes. During the problem hours, as you may guess, clients and application services could not establish connections to the back-end database server.



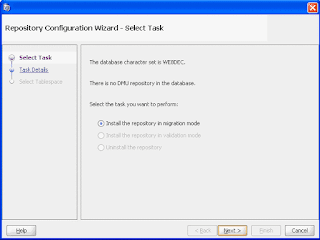

The crs_relocate command relocates applications and application resources as specified by the command options that you use and the entries in your application profile. The specified application or application resource must be registered and running under Oracle Clusterware in the cluster environment before you can relocate it.

A workaround would be to use the second node (by changing the DNS) to reach the database, since on Exadata we have at least two database nodes working in the RAC infrastructure.

Using a load-balancing or failover TNS entry would also help, but the issue was critical and had to be fixed immediately.


In the affected environment, EBS 11i was used as the enterprise ERP application. Fewer than 10 clients needed to connect to the database, and both the clients and the EBS tech stack used TNS entries based on the virtual IP addresses of the Exadata Database Machine nodes.

As 11i could not use the SCAN listener, I was lucky :) This meant fewer things to check :)

Note that for SCAN listeners, you can check the following: http://ermanarslan.blogspot.com.tr/2014/02/rac-listener-configuration-in-oracle.html


Okay, I jumped directly to the first node, because the TNS-12514 error was reported for connections to instance 1.

Before going further, I want to explain what the TNS-12514/ORA-12514 error typically means.

When you get this error, you can assume that your connection request reached the listener, but the service name you provided is not known to the listener (for a SID, the corresponding error is TNS-12505). In other words, the database service specified in your connection request is not registered with the listener.
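To make the distinction concrete, here is a toy model of the lookup the listener performs. It is a hypothetical sketch, not Oracle code: the names `listener_lookup`, `TNS_OK` and `TNS_12514` are made up for illustration, and a real listener learns its services through registration by the instance rather than from a static array.

```c
#include <stddef.h>
#include <string.h>

/* Toy model (hypothetical, not Oracle code): the listener only hands a
 * connection to the instance if the requested service has been
 * registered with it. */
enum { TNS_OK = 0, TNS_12514 = 12514 };

int listener_lookup(const char *const *registered, size_t n,
                    const char *requested)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(registered[i], requested) == 0)
            return TNS_OK;      /* service known: connection is handed off */
    return TNS_12514;           /* listener is up, but service is not registered */
}
```

The point of the model is that TNS-12514 can only be returned by a listener that is running and reachable, which is why a "no listener" error would point somewhere else entirely.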

The cause may be a local_listener parameter problem, or a problem caused directly by the listener process. We will see the details in the next paragraph.

When I connected to the first node, I saw that the listener was running. Actually, this was expected, because the error was not a "no listener" error. Still, I restarted it directly, because I wanted a clean environment.

While starting the listener, I saw the following in my terminal:

```
<msg time='2014-10-26T10:54:16.302+02:00' org_id='oracle' comp_id='tnslsnr'
 type='UNKNOWN' level='16' host_id='osrvdb01.somexampleerman.net'
 host_addr='10.10.10.100'>
 <txt>Error listening on: (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=exa01-vip)(PORT=1529)(IP=FIRST)))
 </txt>
</msg>
<msg time='2014-10-26T10:54:16.302+02:00' org_id='oracle' comp_id='tnslsnr'
 type='UNKNOWN' level='16' host_id='exa01.blabla.net'
 host_addr='10.10.10.100'>
 <txt>TNS-12545: Connect failed because target host or object does not exist
 TNS-12560: TNS:protocol adapter error
 TNS-00515: Connect failed because target host or object does not exist
 Linux Error: 99: Cannot assign requested address
```

Okay, the problem was there, especially in this line:

```
Error listening on: (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=exa01-vip)
```

However, this was not the cause of the problem; it was the result of it.

The real causes were the following lines:

```
TNS-00515: Connect failed because target host or object does not exist
Linux Error: 99: Cannot assign requested address
```

This was an error stack, and since the underlying error sits at the bottom of a stack, we can see that the first error was Linux Error 99.

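Linux Error 99, which came from the operating system of the Exadata compute node (Oracle Linux), is defined in errno.h as:

```
99 EADDRNOTAVAIL Cannot assign requested address
```

"TNS-00515: Connect failed because target host or object does not exist" says the same thing, but in Oracle's language.

Wow, what a surprise! :)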
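If you want to check this mapping yourself, the following small C sketch formats an errno value the way the TNS stack prints it. The function name `format_linux_error` is hypothetical; the text after the number comes from the C library's `strerror()`, so its wording may differ between libc implementations.

```c
#include <errno.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: renders an errno value the way it appears at the
 * bottom of a TNS error stack, e.g. "Linux Error: 99: <strerror text>".
 * Returns the number of characters snprintf() wanted to write. */
int format_linux_error(char *buf, size_t len, int err)
{
    return snprintf(buf, len, "Linux Error: %d: %s", err, strerror(err));
}
```

On Oracle Linux (glibc), calling `format_linux_error(buf, sizeof buf, EADDRNOTAVAIL)` should produce the same `Linux Error: 99: Cannot assign requested address` line seen in the stack above.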

I guess the listener calls bind() here:

```c
int bind (int socket, struct sockaddr *addr, socklen_t length)
```

And it failed because the specified address, exa01-vip, was not available on this database node.

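The code could be something like the following (a guess at what the listener does; 192.168.0.91 stands for the virtual IP address of node 1):

```c
#include <arpa/inet.h>
#include <stdio.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

int main(void)
{
    int s = socket(AF_INET, SOCK_STREAM, 0);
    struct sockaddr_in myname;

    memset(&myname, 0, sizeof(myname));
    myname.sin_family = AF_INET;
    myname.sin_port = htons(1529);                       /* listener port, network byte order */
    myname.sin_addr.s_addr = inet_addr("192.168.0.91"); /* virtual IP address of node 1 */

    /* I guess this is where it breaks: if the VIP is not configured on this
     * host, bind() returns -1 and sets errno to EADDRNOTAVAIL (99). */
    int rc = bind(s, (struct sockaddr *) &myname, sizeof(myname));
    if (rc == -1)
        perror("bind");
    close(s);
    return rc == -1;
}
```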

Okay..

After analyzing the above, I used the ifconfig command to check whether the VIP was there or not.

It was not: ifconfig did not list the VIP.

Then I tried to ping the VIP. The ping succeeded, so the IP was up, but not on its original node (node 1 in this case). So it was expected to get an error while starting the associated listener.
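The ifconfig check can also be done programmatically. The sketch below, with a hypothetical function name `is_local_ipv4`, walks the interface list returned by `getifaddrs()` and reports whether a given IPv4 address (for example, node 1's VIP) is configured on the local host. During the incident, one would expect it to return 0 for node 1's VIP on node 1 and 1 on node 2.

```c
#include <arpa/inet.h>
#include <ifaddrs.h>
#include <netinet/in.h>
#include <stddef.h>
#include <sys/socket.h>

/* Returns 1 if the IPv4 address `ip` is configured on any local interface,
 * 0 if it is not, and -1 on error (invalid address or getifaddrs failure). */
int is_local_ipv4(const char *ip)
{
    struct in_addr target;
    struct ifaddrs *list, *ifa;
    int found = 0;

    if (inet_pton(AF_INET, ip, &target) != 1)
        return -1;                          /* not a valid dotted-quad address */
    if (getifaddrs(&list) == -1)
        return -1;

    for (ifa = list; ifa != NULL; ifa = ifa->ifa_next) {
        /* Skip interfaces without an address and non-IPv4 entries */
        if (ifa->ifa_addr == NULL || ifa->ifa_addr->sa_family != AF_INET)
            continue;
        const struct sockaddr_in *sin = (const struct sockaddr_in *) ifa->ifa_addr;
        if (sin->sin_addr.s_addr == target.s_addr) {
            found = 1;                      /* address is plumbed on this host */
            break;
        }
    }
    freeifaddrs(list);
    return found;
}
```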

When I logged in to the 2nd node and ran ifconfig, I saw that the VIP of the 1st node was up on the 2nd node.

So my suspicion was confirmed: the VIP of node 1 was not present on node 1, but it was present on node 2, and this was the cause of the problem.

But why, and how, had this VIP migrated to the 2nd node?

To answer these questions, I first checked the Linux messages log on node 1 and saw the following:

```
Oct 26 09:10:33 osrvdb01 kernel: igb: eth2 NIC Link is Down
Oct 26 09:10:33 osrvdb01 kernel: igb: eth1 NIC Link is Down
Oct 26 09:10:33 osrvdb01 kernel: bonding: bondeth0: link status down for idle interface eth1, disabling it in 5000 ms.
Oct 26 09:10:33 osrvdb01 kernel: bonding: bondeth0: link status down for idle interface eth2, disabling it in 5000 ms.
Oct 26 09:10:38 osrvdb01 kernel: bonding: bondeth0: link status definitely down for interface eth1, disabling it
Oct 26 09:10:38 osrvdb01 kernel: bonding: bondeth0: link status definitely down for interface eth2, disabling it
```

So the problem was obvious: there was a failure in the network links related to the virtual interface. (Note: the customer had performed a system operation and changed the switches, and this was a result of that. It was not Exadata's fault :))

So Linux on node 1 detected the link errors and disabled both slaves of bondeth0 (eth1 and eth2), leaving bondeth0 without an active link.
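When scanning a long messages file, it can help to pick out these bonding events automatically. The following is a minimal sketch, assuming the exact message format shown above; `parse_bond_link_down` is a hypothetical helper, not part of any Oracle or Linux tool. It only matches the final "definitely down" messages, not the earlier "disabling it in 5000 ms" warnings.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: extracts the bond and slave interface names from a
 * kernel message such as
 *   "... bonding: bondeth0: link status definitely down for interface eth1, disabling it"
 * Returns 1 on a match, 0 otherwise. Both buffers must hold 32 bytes. */
int parse_bond_link_down(const char *line, char bond[32], char slave[32])
{
    const char *p = strstr(line, "bonding: ");
    if (p == NULL)
        return 0;
    /* %31[^:] reads the bond name up to the colon,
     * %31[^,] reads the slave name up to the comma */
    return sscanf(p, "bonding: %31[^:]: link status definitely down for interface %31[^,]",
                  bond, slave) == 2;
}
```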

Once bondeth0 had no working link, the public IP and the VIP of node 1 became unavailable on node 1, and as a result the VIP was failed over to node 2 (this is RAC :))

Next, we needed to identify the "thing" that had migrated this VIP from node 1 to node 2.

To find it, I checked the RAC logs.

The listener log, located in the Grid home, was saying that it was no longer listening on exa01-vip. This was consistent with what I saw when I first checked the server: the listener was up, but could not listen on the VIP address.

Then I checked crsd.log, where I saw the following:

```
Received state change for ora.net1.network exadb01 1 [old state = ONLINE, new state = OFFLINE]
```

So crsd had detected that the network resource (ora.net1.network) associated with the problematic interface had gone down.
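A similar hypothetical helper can pull the resource name, node and state transition out of the crsd.log line shown above. The name `parse_state_change` is made up, and the sketch assumes the exact layout of that one line; other crsd.log messages, or other Clusterware versions, may be formatted differently.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: parses a crsd.log line of the form
 *   "Received state change for <res> <node> <n> [old state = X, new state = Y]"
 * Returns 1 on a match, 0 otherwise. Each buffer must hold 64 bytes. */
int parse_state_change(const char *line, char res[64], char node[64],
                       char old_state[64], char new_state[64])
{
    const char *p = strstr(line, "Received state change for ");
    if (p == NULL)
        return 0;
    /* %*d skips the instance number; %63[^]] reads up to the closing bracket */
    return sscanf(p,
                  "Received state change for %63s %63s %*d [old state = %63[^,], new state = %63[^]]",
                  res, node, old_state, new_state) == 4;
}
```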

I saw the restart attempts, too:

```
CRS-2672: Attempting to start 'ora.net1.network' on 'exadb01'
```

The attempts were failing:

```
CRS-2674: Start of 'ora.net1.network' on 'exadb01' failed
quencer for [ora.net1.network exadb01 1] has completed with error: CRS-0215: Could not start resource 'ora.net1.network'.
```

Then I saw that the VIP was failed over from node 1 to node 2:

```
2014-10-26 10:21:56.371: [ CRSPE][1178179904] {0:1:578} RI [ora.exadb01.vip 1 1] new external state [INTERMEDIATE] old value: [OFFLINE] on ecadb02 label = [FAILED OVER]
2014-10-26 10:21:56.371: [ CRSPE][1178179904] {0:1:578} Set LAST_SERVER to exadb02 for [ora.exadb01.vip 1 1]
2014-10-26 10:21:56.371: [ CRSPE][1178179904] {0:1:578} Set State Details to [FAILED OVER] from [ ] for [ora.exadb01.vip 1 1]
2014-10-26 10:21:56.371: [ CRSPE][1178179904] {0:1:578} CRS-2676: Start of 'ora.exadb01.vip' on 'exadb02' succeeded
```


So the failover was performed by Clusterware.

But what was the benefit of this failover? The question naturally comes to mind, since clients still could not connect to PROD1 even though the corresponding VIP had failed over and was up on node 2.

The purpose of the failover is to make node 1's VIP reachable on node 2. Since no listener on node 2 is listening on that address, connection attempts to node 1's VIP are rejected immediately with a "TNS:no listener" (TNS-12541) error, instead of waiting until the TCP timeout expires.

Of course, the clients must use a TNS entry that supports this kind of failover, such as the following:

```
PROD =
  (DESCRIPTION =
    (ADDRESS=(PROTOCOL=TCP)(HOST=exavip1)(PORT=1521))
    (ADDRESS=(PROTOCOL=TCP)(HOST=exavip2)(PORT=1521))
    (CONNECT_DATA =
      (SERVICE_NAME = PROD)
    )
  )
```

With an entry like this, clients first try exavip1. They reach the VIP (now hosted on node 2) and get an error immediately, then they try exavip2 and connect to the database.

This is the logic behind using VIPs in Oracle RAC.
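The client-side behaviour can be sketched in C as a loop over the ADDRESS entries. This is a simplified, hypothetical model of connect-time failover over plain TCP (`connect_first` and `make_addr` are made-up names, and Oracle Net's real implementation is more involved). Each address is tried in order; because a failed-over VIP with no listener behind it answers with a TCP reset, `connect()` fails at once with `ECONNREFUSED` and the loop moves on to the next address.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stddef.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Builds an IPv4 TCP endpoint, e.g. make_addr("192.168.0.91", 1521). */
struct sockaddr_in make_addr(const char *ip, unsigned short port)
{
    struct sockaddr_in a;
    memset(&a, 0, sizeof(a));
    a.sin_family = AF_INET;
    a.sin_port = htons(port);            /* ports are stored in network byte order */
    a.sin_addr.s_addr = inet_addr(ip);
    return a;
}

/* Tries each address in order, like the ADDRESS entries of a DESCRIPTION.
 * Returns the index of the first address that accepts the connection and
 * stores the connected socket in *out_fd, or returns -1 if none do. */
int connect_first(const struct sockaddr_in *addrs, size_t n, int *out_fd)
{
    for (size_t i = 0; i < n; i++) {
        int fd = socket(AF_INET, SOCK_STREAM, 0);
        if (fd == -1)
            return -1;
        if (connect(fd, (const struct sockaddr *) &addrs[i], sizeof(addrs[i])) == 0) {
            *out_fd = fd;
            return (int) i;
        }
        /* No listener behind this address: the peer sends a TCP reset, so
         * connect() fails immediately (ECONNREFUSED) rather than timing out. */
        close(fd);
    }
    return -1;
}
```

With the TNS entry above, the list would hold exavip1 and exavip2 on port 1521; during the incident the first attempt would be refused at once and the second would succeed.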

Okay, so far so good. We analyzed the problem, found its cause, and saw the mechanism that performed the failover. Now let's look at the solution.

The solution I applied was as follows:

```
[root@exa02 bin]# crs_relocate ora.exadb01.vip
Attempting to stop `ora.exadb01.vip` on member `exadb02`
Stop of `ora.exadb01.vip` on member `exadb02` succeeded.
Attempting to start `ora.exadb01.vip` on member `exadb01`
Start of `ora.exa01.vip` on member `exadb01` succeeded.
Attempting to start `ora.LISTENER.lsnr` on member `exadb01`
Attempting to start `ora.LISTENER_PROD.lsnr` on member `exadb01`
Start of `ora.LISTENER_PROD.lsnr` on member `exadb01` succeeded.
```

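So I basically used the crs_relocate utility to move the VIP back to node 1; as the output shows, Clusterware also started the listeners on node 1 (LISTENER and LISTENER_PROD).

In short, crs_relocate relocates a resource registered with Oracle Clusterware (here, ora.exadb01.vip) to another node; the resource must be registered and running under Oracle Clusterware before it can be relocated.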

That is it.

In this article, we have seen a detailed approach to diagnosing network errors on Exadata (and, really, in RAC in general).

We have seen VIP failover and the logic of using VIPs in RAC.

Lastly, we have used the crs_relocate utility to move the VIP back to its original node.

I hope you find it useful. Feel free to comment.
