Migrations are popular these days, just like they always were.

Cloud migrations , Oracle migrations, Sql Server migration, Data Center migrations, Cloud at Customer migration etc.. you name it :)

Today, I want to share a migration approach that can be taken in order to migrate data from Sql Server to Oracle.

I prefer using the term data rahter than the term database, because this database term is used a little different in Sql Server , a little different than it is used in Oracle world.

Anyways;

Sql Server to Oracle migrations can be done in several ways. There are tools which can be purchased. Like this good one:

striim ->

www.striim.com

Migrating Sql Server to Oracle is mostly a logical migration work, right? You can even write your own tool to migrate your data from Sql Server to Oracle.

In this post, however; I will give you an approach , which is based on using Oracle Sql Developer 19.2.

This tool has great features, and it is fun to play with them..

In this post; Sql Developer's Migration Wizard & Copy to Oracle features will be on our focus, while going through the migration process.

Let's see our source and target environments:

Quite realistic right? :) 11.2.0.4 customer should consider upgrading their databases, to a supported version , a long term supported one maybe 19C.. Anyways, we usually don't migrate the database and upgrade it at the same time, so let's not lose our focus.

As I mentioned, migration operations are performed using the SQL Developer as the Migration Tool. We run Sql Developer on Linux, but it can be run on Windows too...

Sql Developer does this migration task in 3 steps...

Normally, all of these 3 steps can be performed ONLINE.

However; in our case, GENERATE and DATA MOVE steps are performed OFFLINE..

This is done on purpose bytheway..

We just needed to interfere the process, as some of the table formats and most of the time the naming that is used in source, couldn't directly be migrated to Oracle. So we needed to do some manual work by stopping the Sql Developer migration and then by letting it resume, once we complete performing our manual actions.

PREREQUISITIES

- Install SQL DEVELOPER (19.2.0) for Linux x86-64 Download the SQL Developer from the following link.

https://download.oracle.com/otn/java/sqldeveloper/sqldeveloper-19.2.1.247.2212.noarch.rpm

- Install the SQL Developer via root user.

rpm -ivh sqldeveloper-19.2.1.247.2212.noarch.rpm

- Download the jar file from the link below and follow the steps.

https://sourceforge.net/projects/jtds/

- Add jar to SQL Developer as follows;

SQL Developer > Tools > Preferences > Database > Third Party JDBC Drivers > Add Entry

- Connect to SQL Server Database as a privileged user;

Add connection information as follows to “SQL Developer > File > New Connection “

Name : SQL

Username : user

Password : password

Hostname : ermanserver1

Port : 1433

- Create Oracle Database Migration Repository

A database migration repository user/schema is created on target Oracle Database so that we can perform all data migration steps

CREATE USER MIGREP IDENTIFIED BY MIGREP DEFAULT TABLESPACE USERS TEMPORARY TABLESPACE TEMP;

ALTER USER MIGREP QUOTA UNLIMITED ON USERS;

GRANT ALTER ANY ROLE, ALTER ANY SEQUENCE, ALTER ANY TABLE, ALTER TABLESPACE, ALTER ANY TRIGGER, COMMENT ANY TABLE,CREATE ANY SEQUENCE,CREATE ANY TABLE,CREATE ANY TRIGGER,CREATE ROLE,CREATE TABLESPACE,CREATE USER, DROP ANY SEQUENCE, DROP ANY TABLE, DROP ANY TRIGGER, DROP TABLESPACE, DROP USER, DROP ANY ROLE, GRANT ANY ROLE, INSERT ANY TABLE, SELECT ANY TABLE, UPDATE ANY TABLE, DBA TO MIGREP;

GRANT ALL PRIVILEGES,CREATE ROLE , ALTER ANY TRIGGER,CREATE USER, CONNECT, RESOURCE, CREATE VIEW, CREATE MATERIALIZED VIEW, CREATE PUBLIC SYNONYM TO MIGREP WITH ADMIN OPTION;

- Associate Migration Repository

After the migration repository user is created, we establish our Oracle Database connection via MIGREP schema using the SQL Developer. After that, we associate our migration repository by clicking on the Associate Migration Repository button, as shown below;

MIGRATION

Migration consists of 3 steps: Capture, Generate and Data Move.

CAPTURE

In Capture step , we connect both to the SQL Server Database and to the Oracle Database via Sql Developer. After establishing our connections, we start our capture by clicking the migrate button as shown below. -- As a result, we get a master sql created. This master sql is used to create the database objects in the target Oracle Database, in the next step.

"SQL Developer Tools > Migration > Migrate"

The migration wizard requests some information for the capture phase.

As shown below, we populate the related screens and start our migration;

Specify a name and directory for the migration project... Migration files are located on this directory.

Source Database - Select Online Mode for Source Database (SQL Server)

Choose the schema to be migrated. e.g. INTERFACE schema

Conversion Matrix ; in Convert Menu we can modify the data type conversion matrix. Although , Oracle Data Type Column is modifiable; it is okay to continue with the default options (at least, it was okay in our case).

However; we need to modify some modifiables under the advanced options tab. So we click the Advanced Options button..

In File Creation Options, we select "One Single File option."

In General Options, we uncheck "Generate Comments, Latest Privilege Schema Migration, Generate Failed Objects".

We check "Generate Stored Procedure for Migrate Blobs Offline, Create Users, Generate Separate Emulation Package".

We also uncheck “Target Oracle Database 12c” as our Target database version is 11g.

Object Types: In our case we move only the data, the tables, so we check “Tables“ only.

Identifier Options : In Identifier Options window, we check only the checkbox named “Microsoft SQL Server: Is Quoted Identifier On“.

Translators: We uncheck all Oracle Database 12c Features.

Translate: SQL Objects : We move all the Sql Objects to the left side (In our case we migrate only the tables to the target system.)

Target database: Online generation doesn't really work for us, therefore we select the offline mode for the GENERATE step. It is mainly because, we need to modify the generated script (when necessary) and run it manually.

Move Data: As we select the Offline mode for the GENERATE Step, we select the offline mode again here, normally.

Summary: We review the migration

summary and continue..

Editing and Running the "Master.sql":

After completing Migration Wizard, a master.sql is created in the Project Directory. (Note that, we defined the Project directory before, in the relevant migration wizard screen)

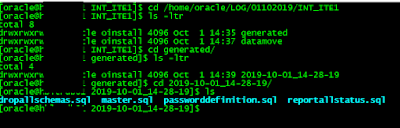

The Project directory contains folders named “generated” and “datamove”. The "master.sql" is created in the "generated” folder.

The master.sql is the one that is used for creating the users/schemas/objects in the target Oracle Database.

We execute this

"master.sql" manually, after we change the password with the new passwords we prefer.

We just run the master.sql using the sqlplus.. If any errors are received when running master.sql script in SQL Developer,

these errors should be carefully examined.

What do we modify in master.sql? Well it depends.. We replace the turkish characters used in table and column names. Basically this kind of stuff.. We edit the master.sql in a way to make the DDL statements inside of it be compatible with Oracle.. (Note that, our target sytem version (Oracle Database version) was 11.2.0.4)

GENERATE:

After the capture step, we continue with the Generate step.

In Generate step, we need to install SQL Server sqlcmd and bcp command-line tools on Oracle Linux 6 (in our case, the host that we run Sql Developer was an OEL 6).

After these steps are completed, we run "MicrosoftSQLServer_data.sh" script on our host.

The script exports data from the source database to several files.

Note that, before running this script, the Linux environments "LANG" and "NLS_LANG" must be defined properly in our shell.

Installing SQL Server sqlcmd and bcp Utility:

sudo su

curl https://packages.microsoft.com/config/rhel/6/prod.repo > /etc/yum.repos.d/mssql-release.repo

exit

sudo yum remove unixODBC-utf16 unixODBC-utf16-devel #to avoid conflicts

sudo ACCEPT_EULA=Y yum install msodbcsql

# optional: for bcp and sqlcmd

sudo ACCEPT_EULA=Y yum install mssql-tools

echo 'export PATH="$PATH:/opt/mssql-tools/bin"' >> ~/.bash_profile

echo 'export PATH="$PATH:/opt/mssql-tools/bin"' >> ~/.bashrc

source ~/.bashrc

# optional: for unixODBC development headers

sudo yum install unixODBC-devel

Running “MicrosoftSQLServer_data.sh

export LANG=tr_TR.ISO-8859-9 (in our case)

export NLS_LANG=Turkish_TURKEY.WEISO8859P9 (in our case)

cd /home/oracle/LOG/01102019/INT_ITE1/datamove/2019-10-01_14-28-24

./MicrosoftSQLServer_data.sh <ip_address_of_sqlserver> <user> <password>

DATA MOVE:

Once the generate step is completed, we have the data exported from Sql Server in several files located in the host that we run our Sql Developer on.

In the Data Move phase, we import this data to Oracle.

Generation uses sqlcmd and bcp utilities to export the data from Sql Server.

Data Move uses sqlldr (SQL Loader) to import this data to Oracle.

In the Data

Move step, we copy all the files to the target database host, and execute the oracle_loader.sh from there.

Before

running this script, Linux environment variables "LANG" and

"NLS_LANG" must be defined properly in our terminal.

Running oracle_loader.sh

export LANG=tr_TR.ISO-8859-9 (in our case)

export NLS_LANG=Turkish_TURKEY.WEISO8859P9 (in our case)

cd /home/oracle/LOG/01102019/INT_ITE1/datamove/2019-10-01_14-28-24

./oracle_loader.sh <db_name/TNS_Entry_of_oracle> <user> <password>

--note that we run this oracle_loader.sh script from a host where an ORACLE_HOME is installed. (recommended : from the target database host). That 's because -> when Oracle Home is installed, we get our Sql loader installed as well. Note that, we also populate the tnsnames.ora file in that Oracle Home with the TNS_ENTRY of our target Oracle database. (as you may already saw in the example command above, we use the TNS_ENTRY_of_oracle as the first command line argument, while runnning the oracle_loader.sh)

COMPARISON:

After the data move, we can say that our migration is completed. But we need to compare the data. We need to ensure that , the data stored in the source (Sql Server) is properly migrated to target (Oracle)..

So, after completing the migration steps, we must compare metadata and data between

source and target databases.

Compare Table Count

We compare the table count with the following sql queries.

SQL Server Table Count SQL

SELECT Count(*)

FROM erman_db.information_schema.TABLES

WHERE table_type = 'base table'

Oracle Table Count SQL

SELECT Count(owner) AS "TABLE COUNT"

FROM dba_objects

WHERE owner LIKE ( '%ERMAN_DB' )

AND object_type = 'TABLE';

Compare Row Count

We compare the row count of the migrated tables with the following sql queries.

SQL Server Table Row Count SQL

SELECT Sum([partitions].[rows]) AS [TotalRowCount],

[tables].NAME AS [TableName],

Schema_name(schema_id) AS [SchemaName]

FROM sys.tables AS [Tables]

JOIN sys.partitions AS [Partitions]

ON [tables].[object_id] = [partitions].[object_id]

AND [partitions].index_id IN ( 0, 1 )

GROUP BY Schema_name(schema(schema_id), [tables].NAME

Oracle Table Row Count SQL

SELECT num_rows,

table_name,

owner

FROM dba_tables

WHERE owner LIKE ( '%ERMAN_DB' )

ORDER BY num_rows DESC;

KNOWN ISSUES:

In our tests, we saw some cases where some of the tables could not be migrated directly by using the Sql Developer's Migration Wizard.

They could not be migrated due to errors that Sql Developer or Sql Loader(in the background) got during the migration process.

The errors that we recorded during our tests were listed below;

ERROR : SQL*Loader-466: Column PROMPT does not exist in table XXXX.

ERROR : 2 Rows not loaded because all fields were null. (XXX)

ERROR : 74 Rows not loaded because all fields were null.

ERROR : SQL*Loader-350: Syntax error at line 4. Illegal combination of non-alphanumeric characters into table XXXX

ERROR : Record 71: Discarded - all columns null.

ERROR : ORA-01400: cannot insert NULL into (XXX.XXX)

ERROR : Error on table XXXX, column YYYY

ERROR : SQL*Loader-500: Unable to open file (XXX )

ERROR : SQL*Loader-553: file not found S

ERROR : SQL*Loader-509: Systemerror:No such file or directory

ERROR : SQL*Loader-2026: the load was aborted because SQL Loader cannot continue.

ERROR : Field in data file exceeds maximum length

ERROR : Rejected - Error on table XXX, column YYY. Field in data file exceeds maximum length

Actually, by looking at the error messages, we can directly tell the causes. Actually, these causes may be fixed sometimes by editing the SQL Loader files or by taking some actions in the source (in Sql Server side).. However; we didn't take any manual actions for fixing these errors.

What we have done is, we just used the "Copy to Oracle" method for the failing tables, and it worked.

We dropped the failed tables(which contains rows in the target) from the target and used Copy to Oracle to re-migrate them.

We didn't drop the failed tables(which doesn't contains any rows in the target) from the target and used Copy to Oracle to re-migrate them directly.

Somehow, the Copy to Oracle, which uses sqlcl in the backend, could migrate these kinds of table data properly. This may also mean that "Copy to Oracle" feature of Sql Developer is a little more stable than the Migration Wizard :)